Why OpenAI increasingly looks like the most “closed” player in AI

In the last few days two headlines landed almost at once:

- OpenAI mobilising to avoid losing ground to Google;

- yet another release from DeepSeek.

I don’t look at this as a casual Twitter observer, but as someone who regularly integrates different models into real products for end‑users.

From that angle, it’s getting harder to believe in a future where OpenAI keeps its infrastructure lead.

Not because their models are bad, but because other things now matter more: accessibility, predictability, and how you treat developers.

Two layers of AI and the rise of aggregators

You can roughly split the AI world into two layers:

- Client layer – ChatGPT, Gemini, Claude, Copilot, etc.

- Infrastructure layer – the models and APIs everything actually runs on.

As Andrej Karpathy put it, echoing Andrew Ng’s well-known line, AI is becoming “the new electricity”:

large model providers are the generators,

while aggregators like OpenRouter, Riser and Poe are the sockets that businesses actually plug into.

The end‑user sees a nice chat interface.

The developer sees quotas, rate limits and access rules.

And it’s exactly on this infrastructure layer that OpenAI is increasingly losing ground.

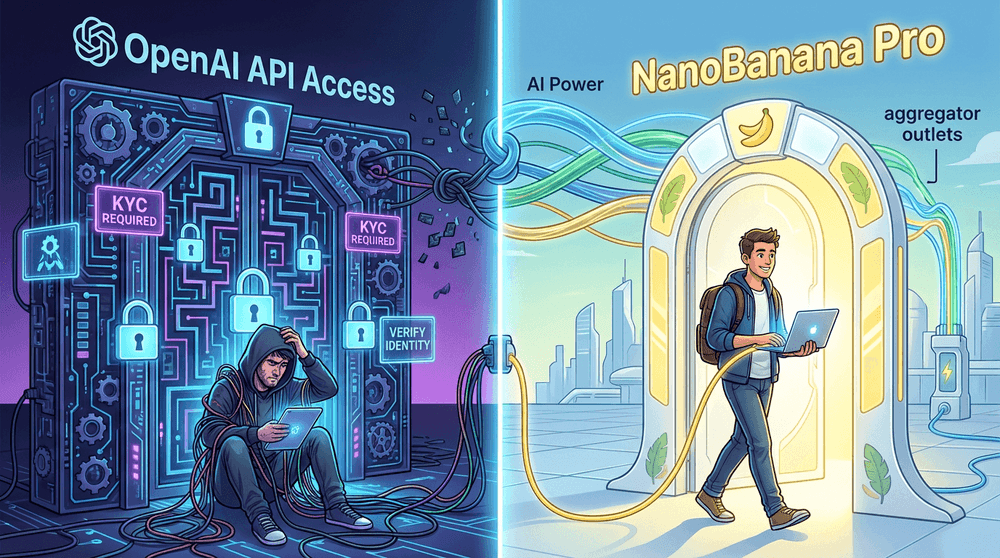

KYC, gpt‑image‑1 and the NanoBanana Pro effect

To work properly with OpenAI’s cutting‑edge models — gpt‑image‑1 or their video models — you have to clear KYC:

- passport upload;

- third‑party verification;

- one‑time link;

- a real risk of “locking yourself out” with a single mistake.

If you fail once, there is no second attempt.

Developer forums are full of identical stories.

When NanoBanana Pro appeared — without any of this — we simply plugged it into Riser, built an AI persona on top, and everything worked out of the box. No verification quests, no tickets, no lottery.

After that kind of experience, it matters much less what “revolutionary” model OpenAI ships next.

What stays with you is the feeling that this is the most closed provider on the market.

The “Open” in the name is starting to feel like a historical artefact.

Sora as a lottery, Google Cloud as a utility

Video tells a similar story.

In Google Cloud I can generate video in a predictable way:

- clear quotas;

- transparent metering and billing;

- the whole thing feels like a regular cloud service.

Access to Sora 2 via the API, on the other hand, feels like a lottery:

- random errors;

- instability;

- no clear idea whether it’s KYC, geo, or some hidden internal limits.

Given that images and video mean huge budgets, this kind of unpredictability quickly turns into lost revenue for OpenAI:

businesses simply move to providers where you don’t feel like you’re spinning a roulette wheel every time you click “Generate”.

Quotas, tiers and the feeling of “begging for access”

To be fair, every major player has access constraints.

OpenAI, Anthropic and Google all live in a world of finite GPUs.

But how you express those limits matters a lot.

For OpenAI and Anthropic, access often looks like a long journey through a tiered system:

- you start with tiny quotas;

- as you spend more, you may or may not be promoted to a higher tier;

- sometimes it feels like a game of “prove you’re a serious enough developer”.

In Google Cloud, despite all its quirks, the model is simpler:

you set up billing, follow some basic rules — and from there you’re mostly constrained by common sense and budget, not rituals around access.

You could blame everything on “lack of compute”.

But given Microsoft’s backing and the new deals with Oracle, it’s hard to argue this is only about hardware.

It feels much more like a policy choice:

who gets access to the best models, how tightly you control the funnel, and how much friction you’re willing to introduce.

In terms of raw resources, Google and OpenAI are roughly in the same league.

The difference is that Google’s AI services feel like infrastructure, while OpenAI increasingly feels like a closed club — one you might not get into even if you’re ready to pay.

How DeepSeek behaves — and why it hurts closed players

Against this backdrop, DeepSeek looks very different:

- they ship new model versions and open the weights;

- you can download, fine‑tune and host them with different providers;

- they aggressively cut prices while silently improving quality under the hood;

- the model name stays stable, quality goes up, price per token goes down.

A new version launched yesterday — and we barely had to change anything in our Riser setup.

If a business wants to, it can deploy the model closer to its own infrastructure.

That’s no longer “just for tinkering”; it’s real control over the AI layer.
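One way an aggregator can get the same "stable name, changing engine" property for any provider is to keep a thin alias layer between product code and concrete model identifiers. The sketch below is an illustration only: the alias, provider label, model IDs and price fields are hypothetical placeholders, not Riser's actual configuration and not real price quotes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelTarget:
    provider: str                   # hypothetical provider label
    model_id: str                   # identifier the provider expects
    usd_per_million_tokens: float   # placeholder price, not a real quote


# Product code refers only to the alias on the left-hand side.
REGISTRY = {
    "chat-default": ModelTarget("example-host", "example-model-v1", 1.00),
}


def resolve(alias: str) -> ModelTarget:
    """Return the concrete target currently behind a logical alias."""
    return REGISTRY[alias]


def upgrade(alias: str, new_target: ModelTarget) -> None:
    """Point an existing alias at a new version; callers stay unchanged."""
    REGISTRY[alias] = new_target
```

With this layout, moving to a new release is a single `upgrade(...)` call (or a one-line config change), and everything that calls `resolve("chat-default")` picks it up without edits.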

In this world, the winner isn’t the one with the loudest marketing, but the one whose AI layer behaves like a quiet, predictable utility, not a capricious deity hiding behind a single closed API.

Distributed AI networks: torrents for compute

At the same time, we’re seeing the rise of distributed GPU networks and DePIN projects.

io.net, Telegram’s Cocoon and others are trying to build “torrents for compute”, where:

- some participants bring their GPUs and earn rewards;

- others buy this capacity for training and inference.

Technically, it’s attractive.

The bigger questions, in my view, are about trust and rules:

- who decides which models get trained on this shared capacity;

- who owns the resulting weights;

- what stops someone from “pulling” a community‑trained model into a private, closed product.

The answers will determine whether these networks become a real alternative to centralised players — or just another intermediary layer (in the worst case, with a strong crypto‑scheme aftertaste).

For now, there isn’t much of a tangible product there for us as an aggregator — we’re watching, but not building on it yet.

Where Riser fits into this picture

We approach all of this as practitioners.

Riser is a network‑aware AI aggregator with AI personas (copywriters, illustrators, etc.), where we pick the optimal model for each task under the hood: GPT‑5, Gemini, DeepSeek, NanoBanana Pro and others.

The user sees the outcome, not the engine’s logo.
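To make "picking a model per task under the hood" concrete, here is a minimal sketch of task-based routing with ordered fallback. It is an assumption about how such a router could look, not a description of Riser's implementation: the task name, backend names and the two stand-in backends are all hypothetical, and no real provider API is called.

```python
from typing import Callable, Dict, List, Tuple

# A backend is any callable that takes a prompt and returns a result string.
Backend = Callable[[str], str]


class AllBackendsFailed(RuntimeError):
    """Raised when every candidate backend for a task raised an error."""


def run_task(task: str, prompt: str,
             routes: Dict[str, List[Tuple[str, Backend]]]) -> Tuple[str, str]:
    """Try the backends registered for `task` in order; return (name, result)."""
    errors = []
    for name, backend in routes[task]:
        try:
            return name, backend(prompt)
        except Exception as exc:  # broad on purpose: any failure triggers fallback
            errors.append(f"{name}: {exc}")
    raise AllBackendsFailed("; ".join(errors))


def flaky_backend(prompt: str) -> str:
    # Stand-in for a provider that is currently failing.
    raise TimeoutError("simulated provider outage")


def stable_backend(prompt: str) -> str:
    # Stand-in for a provider that responds normally.
    return f"draft for: {prompt}"


ROUTES = {
    "copywriting": [("primary", flaky_backend), ("fallback", stable_backend)],
}

print(run_task("copywriting", "spring sale banner", ROUTES))
# ('fallback', 'draft for: spring sale banner')
```

The ordering of each route list is where provider reliability shows up in practice: a backend that fails often gets pushed down the list or removed, and the persona on top never changes.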

From this vantage point it’s very clear:

closed access, hard KYC and unstable APIs are no longer minor UX issues — they’re core factors when choosing a provider.

Where this is heading

- The quality of top models across players is already “good enough”.

- What rises to the top now is openness, predictability and low friction.

- Those who behave like monopolies risk waking up in a couple of years to find that the market has quietly moved to whoever was just a bit more open and convenient.

OpenAI still has a path to remain a leader, but it probably starts with remembering that the first word in their name is supposed to be Open.